In a previous blog article, I shared my experience with Camunda 7 transactions by focusing on Asynchronous Continuations (AC's) and how they impact the observed behavior of a process, especially in failure scenarios (link).

This article deepens the understanding of Camunda's transaction handling by analyzing multiple message correlation scenarios, with particular attention to concurrency and optimistic locking.

Optimistic Locking

To ensure a consistent state for process instances, only one thread is permitted to change the state of an execution at a time. Because collisions are expected to be rare, Camunda avoids conventional (pessimistic) locking for the sake of engine performance and uses Optimistic Locking instead. This means that rather than obtaining a lock on database rows at the beginning of a transaction, a validation is performed only when the transaction is committed, to ensure that no inconsistencies can occur. If an entity was updated concurrently, the transaction is not committed but rolled back, and an exception is thrown. For a client that receives an optimistic locking exception, it is generally enough to retry the request. However, retries cost performance and add complexity on the client side, which is why these situations should be prevented where possible.
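The commit-time validation described above can be illustrated with a small in-memory sketch. It is not Camunda code: the class `OptimisticLockingSketch`, the exception `StaleRevisionException` and the revision counter are hypothetical names chosen for this example, and the "table" is just a map. The idea is that every row carries a revision, and a write is accepted only if the revision is still the one the writer originally read.

```java
import java.util.HashMap;
import java.util.Map;

/** In-memory illustration of optimistic locking with a revision counter (not Camunda code). */
class OptimisticLockingSketch {

    /** Hypothetical stand-in for the exception an engine would throw. */
    static class StaleRevisionException extends RuntimeException {
        StaleRevisionException(String message) {
            super(message);
        }
    }

    /** A row as a transaction read it: state plus the revision seen at read time. */
    static final class Row {
        final String state;
        final int revision;

        Row(String state, int revision) {
            this.state = state;
            this.revision = revision;
        }
    }

    private final Map<String, Row> table = new HashMap<>();

    synchronized void insert(String id, String state) {
        table.put(id, new Row(state, 1));
    }

    synchronized Row select(String id) {
        return table.get(id);
    }

    /**
     * Behaves like "UPDATE ... WHERE id = ? AND revision = ?": the write is
     * accepted only if nobody changed the row since this writer read it.
     */
    synchronized void update(String id, String newState, int revisionSeen) {
        Row current = table.get(id);
        if (current == null || current.revision != revisionSeen) {
            throw new StaleRevisionException("Entity " + id + " was updated concurrently");
        }
        table.put(id, new Row(newState, revisionSeen + 1));
    }

    /** Two "transactions" read the same revision; only the first write succeeds. */
    static String demo() {
        OptimisticLockingSketch db = new OptimisticLockingSketch();
        db.insert("A", "waiting");
        Row seenByTx1 = db.select("A");
        Row seenByTx2 = db.select("A");
        db.update("A", "updated by tx1", seenByTx1.revision);
        try {
            db.update("A", "updated by tx2", seenByTx2.revision);
            return "committed";
        } catch (StaleRevisionException e) {
            return "rolled back";
        }
    }

    public static void main(String[] args) {
        System.out.println("Second transaction: " + demo());
    }
}
```

No lock is held between `select` and `update`, so readers never block each other. The cost is that the losing writer has to detect the failure and start over.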

Intermediate Message Catch Events

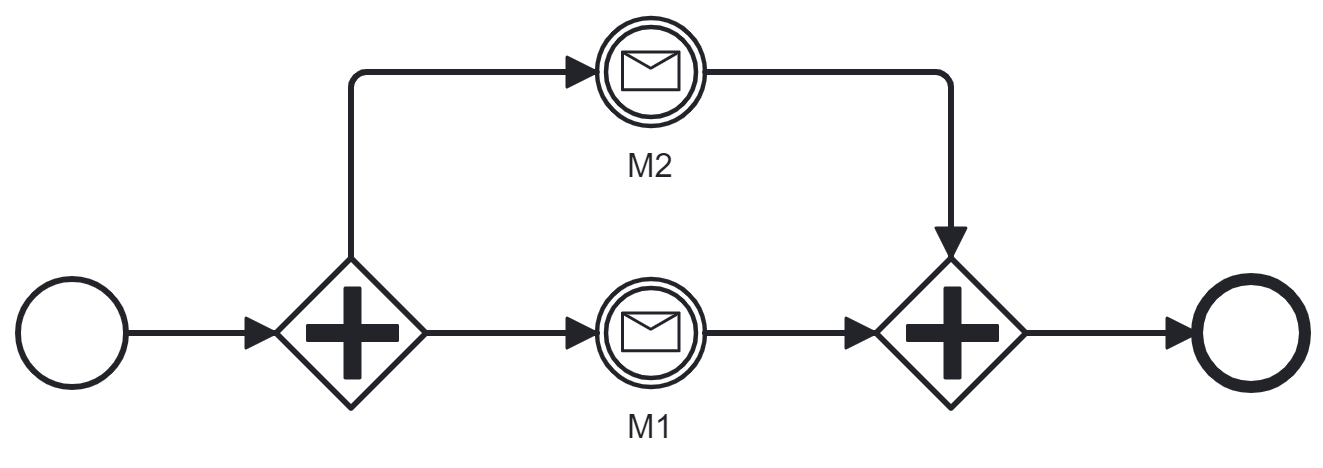

To start off, I used the following process model to understand how concurrent message correlation works for intermediate catch events.

No Asynchronous Continuations

In the first scenario, no AC's are configured in the process. The process is started and is now waiting at the two Message Events. In the Camunda database, we can see 5 executions; let's call them A (the process instance), B, B', C and C'. The following log statements show what might happen if both messages are correlated concurrently. The logs are filtered and simplified to show only the most important actions.

Thread 1: Start CorrelateMessageCmd

Thread 2: Start CorrelateMessageCmd

Thread 1: selectExecution (id = B)

Thread 1: selectExecution (id = B')

Thread 1: selectExecution (id = A)

Thread 2: selectExecution (id = C)

Thread 2: selectExecution (id = C')

Thread 2: selectExecution (id = A)

Thread 1: deleteEventSubscription

Thread 1: updateExecution (id = B)

Thread 1: updateExecution (id = A)

Thread 1: deleteExecution (id = B')

Thread 2: deleteEventSubscription

Thread 2: updateExecution (id = C)

Thread 2: updateExecution (id = A)

Thread 2: deleteExecution (id = C')

Thread 1: Finish CorrelateMessageCmd

Thread 2: Finish CorrelateMessageCmd

!OptimisticLockingException!

Note that even if the database statements of both transactions were applied, the state of the instance would not be as expected: 3 executions would remain, while we would expect the instance to be completed. This is because both message correlations acted on the assumption that they were the first to arrive at the parallel join. Retrying the failed message correlation fixes this problem, because by then the other execution has already reached the join.
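A client-side retry of the failed correlation could be sketched as follows. To keep the example runnable without an engine, `ConflictException` and `withRetry` are hypothetical names, and the correlation call is simulated by a lambda. In a real Camunda 7 client, the action would be the message correlation call and the exception to catch would be `org.camunda.bpm.engine.OptimisticLockingException`.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

/** Sketch of a bounded retry around an operation that may hit a concurrent update. */
class CorrelationRetrySketch {

    /** Hypothetical stand-in for an optimistic locking exception. */
    static class ConflictException extends RuntimeException {
        ConflictException(String message) {
            super(message);
        }
    }

    /** Runs the action again after a conflict, giving up after maxAttempts tries. */
    static <T> T withRetry(Supplier<T> action, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        ConflictException lastConflict = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (ConflictException e) {
                lastConflict = e; // the other transaction won; try again
            }
        }
        throw lastConflict;
    }

    /** Simulated correlation: the first attempt collides, the second succeeds. */
    static String demo(AtomicInteger attempts) {
        return withRetry(() -> {
            if (attempts.incrementAndGet() == 1) {
                throw new ConflictException("execution A was updated concurrently");
            }
            return "correlated";
        }, 3);
    }

    public static void main(String[] args) {
        AtomicInteger attempts = new AtomicInteger();
        System.out.println(demo(attempts) + " after " + attempts.get() + " attempts");
    }
}
```

The attempt limit is a design choice for this sketch: without it, a client could loop indefinitely if conflicts keep occurring.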

AC-After on the Message Events:

In the next scenario, I configured AC-After on both Message Catch Events.

Thread 1: Start CorrelateMessageCmd

Thread 2: Start CorrelateMessageCmd

Thread 1: selectExecution (id = B)

Thread 1: selectExecution (id = B')

Thread 1: selectExecution (id = A)

Thread 2: selectExecution (id = C)

Thread 2: selectExecution (id = C')

Thread 2: selectExecution (id = A)

Thread 1: deleteEventSubscription

Thread 1: updateExecution (id = B)

Thread 1: deleteExecution (id = B')

Thread 2: deleteEventSubscription

Thread 2: updateExecution (id = C)

Thread 2: deleteExecution (id = C')

Thread 1: Finish CorrelateMessageCmd

Thread 2: Finish CorrelateMessageCmd

Thread 3 (TaskExecutor): Start ExecuteJobsCmd

Thread 3 (TaskExecutor): selectExecution (id = A)

Thread 3 (TaskExecutor): selectExecution (id = B)

Thread 3 (TaskExecutor): updateExecution (id = A)

Thread 3 (TaskExecutor): updateExecution (id = B)

Thread 3 (TaskExecutor): Finish ExecuteJobsCmd

Thread 3 (TaskExecutor): Start ExecuteJobsCmd

Thread 3 (TaskExecutor): selectExecution (id = A)

Thread 3 (TaskExecutor): selectExecution (id = B)

Thread 3 (TaskExecutor): selectExecution (id = C)

Thread 3 (TaskExecutor): deleteExecution (id = A)

Thread 3 (TaskExecutor): deleteExecution (id = B)

Thread 3 (TaskExecutor): deleteExecution (id = C)

Thread 3 (TaskExecutor): Finish ExecuteJobsCmd

Note that all executions are deleted at the end. The job executor took over after the Message Events and finished the process instance. No optimistic locking exception occurred, because Threads 1 and 2 did not try to update execution entity A.
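The difference between the two scenarios can be checked mechanically by comparing which executions each thread writes. The following sketch assumes the simplified log format used in this article (not Camunda's real log output); `WriteSetSketch` and its methods are hypothetical helpers. It collects the ids touched by `updateExecution` and `deleteExecution` per thread and reports the overlap, which is only a problem if the two transactions actually run at the same time.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Extracts per-thread write sets from the simplified log lines used in this article. */
class WriteSetSketch {

    // Matches e.g. "Thread 1: updateExecution (id = A)" or
    // "Thread 3 (TaskExecutor): deleteExecution (id = B')".
    private static final Pattern WRITE =
            Pattern.compile("^(Thread \\d+).*?: (?:update|delete)Execution \\(id = ([^)]+)\\)$");

    /** Maps each thread to the execution ids it updates or deletes. */
    static Map<String, Set<String>> writeSets(List<String> lines) {
        Map<String, Set<String>> result = new TreeMap<>();
        for (String line : lines) {
            Matcher m = WRITE.matcher(line.trim());
            if (m.matches()) {
                result.computeIfAbsent(m.group(1), k -> new TreeSet<>()).add(m.group(2));
            }
        }
        return result;
    }

    /** Execution ids written by both threads; an empty set means no conflict is possible. */
    static Set<String> contended(List<String> lines, String threadA, String threadB) {
        Map<String, Set<String>> sets = writeSets(lines);
        Set<String> overlap = new TreeSet<>(sets.getOrDefault(threadA, Collections.emptySet()));
        overlap.retainAll(sets.getOrDefault(threadB, Collections.emptySet()));
        return overlap;
    }

    public static void main(String[] args) {
        List<String> withAcAfter = Arrays.asList(
                "Thread 1: selectExecution (id = A)",
                "Thread 1: updateExecution (id = B)",
                "Thread 1: deleteExecution (id = B')",
                "Thread 2: selectExecution (id = A)",
                "Thread 2: updateExecution (id = C)",
                "Thread 2: deleteExecution (id = C')");
        System.out.println("Contended executions: " + contended(withAcAfter, "Thread 1", "Thread 2"));
    }
}
```

Fed with the log of the first scenario, the same check reports execution A, because both correlating threads update it.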

Non-Interrupting Message Boundary Events

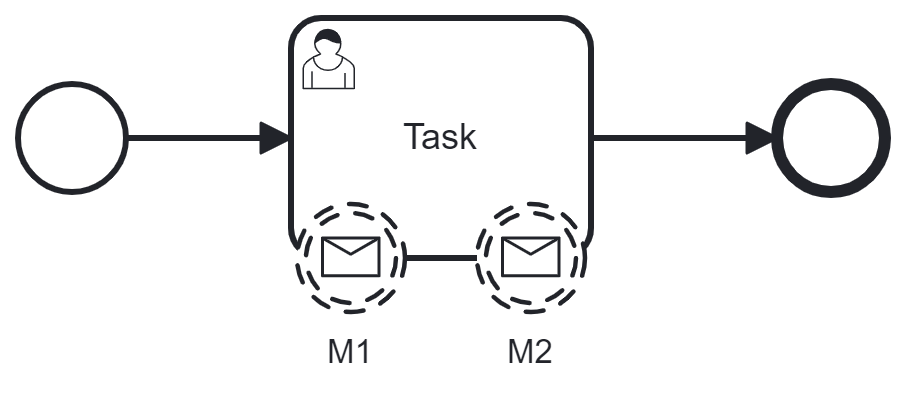

Interestingly, the behavior is different for Boundary Events. The next scenarios show the pitfalls one should consider when using these, especially when multiple messages are expected in quick succession or even concurrently.

The diagram above shows the simple model that is used. While it does not conform to most modeling guidelines, I did not add end events after the Message Boundary Events, to show that the behavior is observable with this minimal model.

Again, the process is started. In this case, the Camunda database shows only 2 executions (A and B). No executions are created for the boundary events. To show what happens when messages are correlated, I consider three scenarios.

No AC's, One Message Correlated

To understand the more complex scenarios, let me first show a single message correlation.

Thread 1: Start CorrelateMessageCmd

Thread 1: selectExecution (id = A)

Thread 1: updateExecution (id = A)

Thread 1: Finish CorrelateMessageCmd

Note that no new executions are created, but the process instance execution is updated.

No AC's, Two Messages Concurrent Correlation

As you can imagine, correlating both messages concurrently can be troublesome, as both transactions modify the same execution entity.

Thread 1: Start CorrelateMessageCmd

Thread 2: Start CorrelateMessageCmd

Thread 1: selectExecution (id = A)

Thread 2: selectExecution (id = A)

Thread 1: updateExecution (id = A)

Thread 2: updateExecution (id = A)

Thread 1: Finish CorrelateMessageCmd

Thread 2: Finish CorrelateMessageCmd !OptimisticLockingException!

Two Messages Sequential Correlation with AC's

In the parallel gateway example, AC's helped to prevent the optimistic locking exception. To test whether this is also true for boundary events, I set AC-After on the M1 Message Event.

Thread 1: Start CorrelateMessageCmd

Thread 1: selectExecution (id = A)

Thread 1: insertExecution (id = B)

Thread 1: insertExecution (id = B')

Thread 1: updateExecution (id = A)

Thread 1: updateExecution (id = B)

Thread 1: Finish CorrelateMessageCmd

Thread 2 (TaskExecutor): selectExecution (id = A)

Thread 2 (TaskExecutor): selectExecution (id = B)

Thread 2 (TaskExecutor): selectExecution (id = B')

Thread 3: Start CorrelateMessageCmd

Thread 3: selectExecution (id = A)

Thread 2 (TaskExecutor): updateExecution (id = A)

Thread 2 (TaskExecutor): updateExecution (id = B')

Thread 2 (TaskExecutor): deleteExecution (id = B)

Thread 2 (TaskExecutor): deleteExecution (id = B')

Thread 3: updateExecution (id = A)

Thread 3: Finish CorrelateMessageCmd

!OptimisticLockingException!

Clearly, the AC did not help prevent the optimistic locking exception. In fact, it made the problem worse: without the AC, sequential correlation of the messages would not have been a problem. With the AC, the asynchronous nature of the job executor means that concurrent updates to the process instance execution entity can occur even if the messages are correlated sequentially.

Conclusion

The main takeaway is, again, that the behavior of Camunda is not immediately obvious from looking at the process model. Transaction boundaries and concurrency play an important role! But there is hope. By analyzing the debug logs, one can understand very clearly why an exception is thrown in certain scenarios. More often than not, optimistic locking exceptions can be prevented by modeling the process carefully and knowing when requests can be concurrent.
